How To Read and Evaluate A Scientific Paper: A Guide for Non-Scientists

AKA everything I learned about scientific papers while getting my MPH.

Source: Evergreen Valley College Library

Becoming a healthcare expert is a journey, not a destination. The pursuit of knowledge in our field is never-ending. And with an ever-expanding body of research and emerging technologies, we continually face new learning opportunities. This means constantly trying to gather as much evidence as possible to understand the problem and form opinions. Reading the headlines is one thing, but reading a study yourself is a great way to dive deeper into a topic.

So how can we non-scientists discern the legitimacy of a study? How can we confidently browse the latest in DIGITAL HEALTH (a peer-reviewed open-access journal that focuses on healthcare in the digital world)? Journal articles can feel super foreign. That’s because these articles are written in scientific language and jargon specifically intended for an audience familiar with the topic, research methods, and terminologies. But the good news is that you can develop fluency in deciphering these data-driven narratives.

In this article, I’ll share some of the things I learned to help me gain confidence in reading journal articles and know when to be receptive vs. when to be skeptical. So, let’s dive in…

Where to find scientific studies

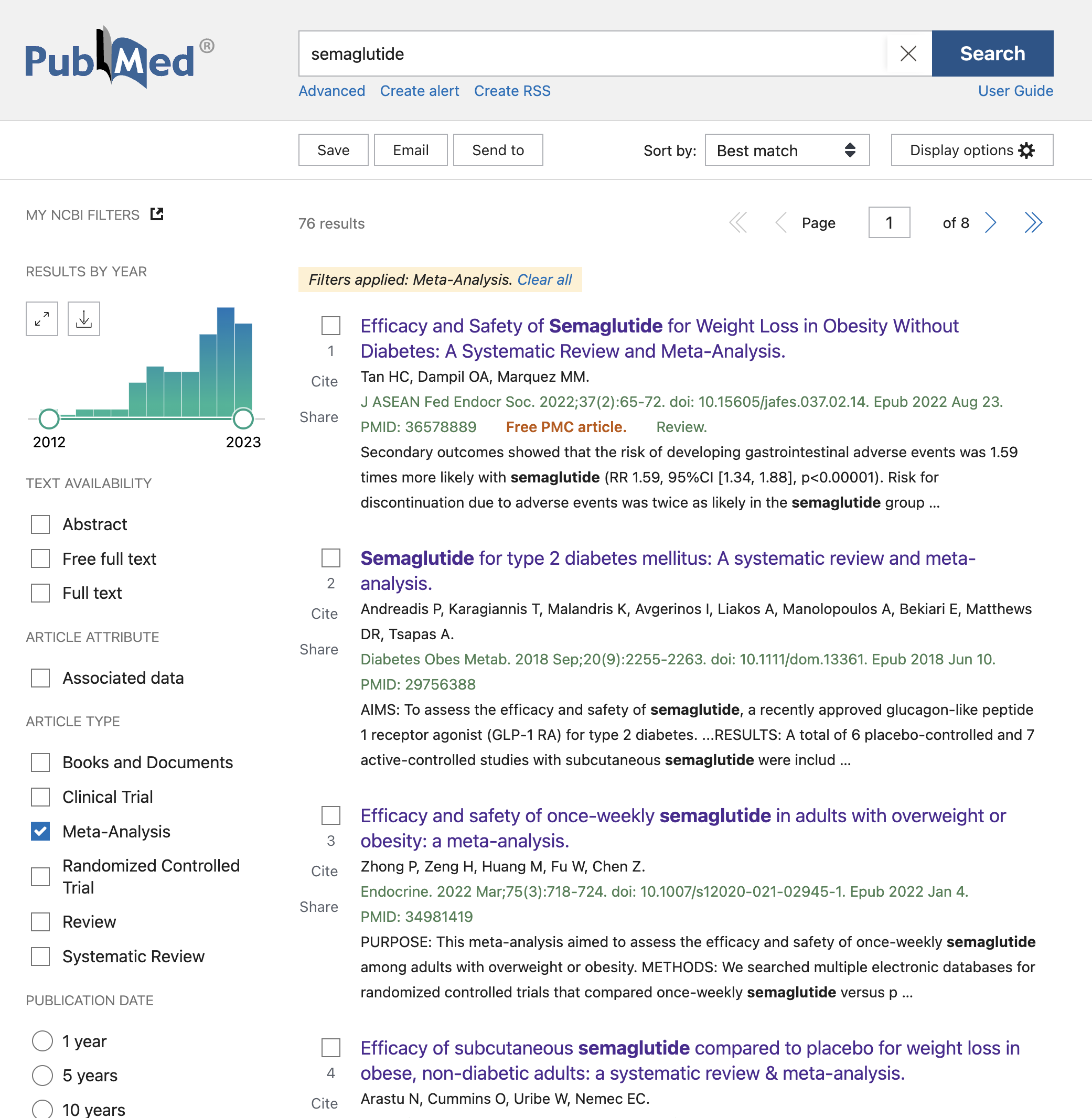

There are numerous online databases that aggregate scientific articles from various fields. Some of the most widely used include PubMed (largely medical), Google Scholar (across disciplines), IEEE Xplore (engineering and technology), and arXiv (for physics, mathematics, computer science, and more).

I always start with PubMed. Let’s say you’re looking at a startup prescribing GLP-1 receptor agonists such as semaglutide for obesity and want to know if this is even a legit drug. I would search semaglutide and start with any meta-analyses (I’ll explain more on that later). In this case, there are 76 results.
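If you run the same PubMed search often, NCBI's E-utilities service lets you run it from a script. The sketch below is one possible approach, not a required workflow. It builds an `esearch` query that limits results to meta-analyses using PubMed's `[pt]` (publication type) field tag, then parses the JSON reply. The helper names `build_esearch_url`, `parse_search`, and `search_pubmed` are my own, and the hit count you get will differ from the number above as new papers are indexed.

```python
import json
import urllib.parse
import urllib.request

# Illustrative sketch; the helper names below are my own, not part of any library.
# NCBI E-utilities "esearch" endpoint for searching PubMed from a script.
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def build_esearch_url(topic: str, retmax: int = 20) -> str:
    """Build a PubMed search URL limited to meta-analyses about `topic`."""
    params = {
        "db": "pubmed",
        "term": f"{topic} AND meta-analysis[pt]",  # [pt] = Publication Type field
        "retmode": "json",  # JSON instead of the default XML
        "retmax": retmax,   # maximum number of PubMed IDs to return
    }
    return f"{ESEARCH_URL}?{urllib.parse.urlencode(params)}"


def parse_search(body: str) -> tuple[int, list[str]]:
    """Return (total number of hits, list of PubMed IDs) from an esearch JSON reply."""
    result = json.loads(body)["esearchresult"]
    return int(result["count"]), result["idlist"]


def search_pubmed(topic: str) -> tuple[int, list[str]]:
    """Run the search over the network (requires internet access)."""
    with urllib.request.urlopen(build_esearch_url(topic), timeout=30) as resp:
        return parse_search(resp.read().decode("utf-8"))
```

Calling `search_pubmed("semaglutide")` returns the total hit count plus the first PubMed IDs (PMIDs), which you can paste into PubMed to open each record. Without an API key, NCBI asks scripts to send no more than three requests per second.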

Some results will show the full text, but more often than not, you only get the abstract. I don’t know if I should be admitting this publicly, but at this point I usually go to Sci-Hub to read the full text. Sci-Hub is a controversial project that has “revolutionized science by making all paid knowledge free”. In my mind, our tax dollars have helped fund these studies so the results should be open to all.

How to read a scientific paper / journal article

Research papers usually follow a pattern known as the IMRAD format, which stands for Introduction, Methods, Results, and Discussion. Of course, you'll also find things like an abstract, tables, cross-references, and extra goodies like data sets and lab protocols.

You don’t have to read the paper from top to bottom. It’s okay to hop between sections, cross-reference data, or revisit certain passages for deeper understanding. The Introduction sets the stage, telling you why the study was conducted, while the Discussion interprets the results in the context of the broader field. I often start with the conclusion and fill in my knowledge from there.

Make sure the study is credible before wasting your time

But before you commit to a close reading, you can skim a paper to decide whether it’s worth the effort. A study's credibility is often closely linked to who conducted it, what it entails, and where and when it's published.

Who: credible researchers and institutions

The integrity and expertise of the researchers and the reputation of their institutions can provide a gauge for the study's trustworthiness. Be cautious of studies by unqualified researchers or obscure institutions. And don’t forget that even the fanciest institutions have researchers who fabricate findings.

What: detailed methodology

The "Methods" section of a study is where the researchers explain what they did. It should clearly state their hypothesis, the steps taken to test it, and the tools used for data analysis. If this section is vague or missing, consider it a red flag.

Where: peer-reviewed journals

Most reputable studies are published in peer-reviewed journals, where other experts have scrutinized the study for its methodology, results, and conclusions. Research published elsewhere, such as blogs or websites, should be taken with a grain of salt.

When: recency of the study

The timing of a study's publication can determine its current applicability. Research in rapidly advancing fields can quickly become outdated, losing relevance as newer insights and technologies emerge. A study conducted 15 years ago might not reflect today's realities or best practices, but it ultimately depends on the subject.

The type of study matters

Now, perhaps the most important thing to look at: study design. The type of study conducted can tell you a lot about the quality of the results.

Systematic Review and Meta-Analyses

Both systematic reviews and meta-analyses aim to synthesize and make sense of the existing body of scientific studies on a particular topic. These can provide a robust overview, but their reliability hinges on the quality of the included studies (and sometimes, there’s just not a lot of research on a topic).

A systematic review follows a detailed, comprehensive plan and search strategy, set out in advance to minimize bias and ensure that all relevant research is considered. The researchers search for, select, appraise, and synthesize research evidence relevant to a particular question, and the findings are then summarized qualitatively. Systematic reviews aim to provide a complete overview of current literature relevant to a research question. Their strength lies in the transparency of the methodology, which should be rigorous and replicable.

A meta-analysis is a statistical technique for combining (or "pooling") the data from multiple studies included in a systematic review. Pooling increases the overall sample size and thus the statistical power, producing more precise point estimates than individual studies can. It quantitatively analyzes the results of similar studies to find common trends or outcomes, effectively creating a 'study of studies'. The accuracy of a meta-analysis depends on the quality and homogeneity of the included studies.
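To make "pooling" concrete, here is a minimal sketch of one common approach, the fixed-effect inverse-variance method: each study is weighted by the inverse of its variance, so larger, more precise studies count more. It also computes Cochran's Q and the I² statistic, two standard ways meta-analyses quantify how much the included studies disagree. This is an illustration, not the method any particular review uses (many use random-effects models instead), and the function name and example numbers are hypothetical.

```python
import math

# Illustrative sketch of fixed-effect, inverse-variance pooling (hypothetical helper name).


def fixed_effect_pool(estimates, std_errors):
    """Pool study results with inverse-variance weights.

    estimates:  per-study effects on an additive scale
                (e.g. mean differences or log risk ratios)
    std_errors: the matching standard errors
    Returns (pooled estimate, pooled standard error, Cochran's Q, I^2 in %).
    """
    weights = [1 / se**2 for se in std_errors]  # more precise studies weigh more
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1 / total_weight)
    # Cochran's Q: weighted squared distance of each study from the pooled value.
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, estimates))
    df = len(estimates) - 1
    # I^2: share of the variation across studies beyond what chance would explain.
    i_squared = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return pooled, pooled_se, q, i_squared
```

With three hypothetical studies reporting mean differences of -2, -1, and -3 (each with a standard error of 1), `fixed_effect_pool([-2.0, -1.0, -3.0], [1.0, 1.0, 1.0])` gives a pooled estimate of -2.0 with a standard error of about 0.58, smaller than any single study's. A high I² is a hint that the studies may be too different to pool meaningfully.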

In essence, a meta-analysis can be thought of as a type of systematic review. However, not all systematic reviews contain meta-analyses. If the results of the individual studies cannot be combined to produce a meaningful and reliable pooled effect estimate, a systematic review may still provide a valuable qualitative summary of the research.

There’s an international, independent, not-for-profit organization, Cochrane, that produces high-quality systematic reviews of medical research findings in healthcare known as Cochrane Reviews. These reviews are internationally recognized as the highest standard in evidence-based healthcare resources. Here’s an example of a review on Echinacea for preventing and treating the common cold.

Randomized controlled trials (RCTs)

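Considered the gold standard in research, RCTs randomly assign participants to either a control group or an experimental group. Because chance, rather than the researchers or the participants, decides who gets the treatment, the groups tend to be similar in both known and unknown characteristics. That minimizes selection bias and confounding, so observed differences between the groups can more confidently be attributed to the treatment.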
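In a small trial, flipping a coin for each participant can leave the groups lopsided by chance, so many trials use permuted-block randomization instead. The sketch below is a hypothetical illustration of that idea (the function name and default block size are my own choices): participants are assigned in shuffled blocks, each containing equal numbers of treatment and control slots.

```python
import random

# Illustrative sketch of permuted-block randomization (hypothetical helper name).


def block_randomize(n_participants, block_size=4, seed=None):
    """Assign participants to 'treatment' or 'control' in shuffled blocks.

    Every complete block holds equal numbers of both arms, so group sizes
    stay balanced during enrollment while the order stays unpredictable.
    """
    if block_size % 2:
        raise ValueError("block_size must be even")
    rng = random.Random(seed)  # seeded generator so the schedule is reproducible
    schedule = []
    while len(schedule) < n_participants:
        half = block_size // 2
        block = ["treatment"] * half + ["control"] * half
        rng.shuffle(block)  # random order within the block
        schedule.extend(block)
    return schedule[:n_participants]
```

`block_randomize(20, seed=1)` returns a 20-item schedule with exactly 10 participants in each arm. In real trials the schedule is generated in advance and concealed from the people enrolling participants, so they can't predict the next assignment.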

But the design, implementation, and monitoring of these trials are costly and time-intensive. Furthermore, the very thing that gives RCTs their strength, randomization, can raise ethical concerns: you can’t ethically randomize people to start smoking.

And sometimes, RCTs are simply not possible. For instance, if the subject of study is a rare condition, recruiting enough participants may be impossible. Or, when the effects of an intervention only become apparent over a very long period, an RCT might be unviable.

So, while RCTs remain the epitome of scientific rigor, they unfortunately remain shackled by financial and practical constraints.

Observational studies

This broad category involves monitoring participants without intervening, and includes several subtypes:

Cohort studies: In these studies, two groups (cohorts) are compared. One group is chosen because they were exposed to a particular risk factor (like smoking), while the other isn't. Both groups are tracked over time to see how many individuals develop a particular outcome (like lung cancer). Cohort studies are good for measuring the incidence (the rate of new cases) of an outcome over time and identifying potential risk factors.

Case-control studies: This approach begins with people who already have a disease (cases) and those without it (controls). Researchers then look back retrospectively to identify exposure to specific risk factors. Case-control studies can be used to establish a correlation between exposures and outcomes, but cannot establish causation. They are often used when studying rare diseases or outcomes.

Cross-sectional studies: In these studies, researchers assess the prevalence of an outcome in a population at a specific point in time. They're often used to identify correlations, but since they provide a snapshot rather than a movie, they also can't determine causality. They are often used to measure the prevalence of health outcomes, understand determinants of health, and describe features of a population.
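Each of these designs tends to report a different headline number. As a rough guide, cohort studies often report a risk ratio (relative risk), case-control studies an odds ratio, and cross-sectional studies a prevalence. The sketch below computes all three from simple counts; the function names and every count in it are hypothetical.

```python
# Illustrative sketch; function names and counts are hypothetical.


def risk_ratio(exposed_cases, exposed_total, unexposed_cases, unexposed_total):
    """Cohort study: risk (incidence) among the exposed divided by risk among the unexposed."""
    return (exposed_cases / exposed_total) / (unexposed_cases / unexposed_total)


def odds_ratio(cases_exposed, cases_unexposed, controls_exposed, controls_unexposed):
    """Case-control study: odds of past exposure among cases vs. among controls."""
    return (cases_exposed / cases_unexposed) / (controls_exposed / controls_unexposed)


def prevalence(with_condition, population):
    """Cross-sectional study: share of the population with the condition right now."""
    return with_condition / population


# Cohort: 30 of 1,000 smokers and 3 of 1,000 non-smokers develop lung cancer.
rr = risk_ratio(30, 1_000, 3, 1_000)    # about 10: smokers have ten times the risk
# Case-control: 80 of 100 cases and 40 of 100 controls were exposed.
or_ = odds_ratio(80, 20, 40, 60)        # about 6
# Cross-sectional: 150 of 1,000 surveyed adults currently have the condition.
prev = prevalence(150, 1_000)           # 0.15, i.e. 15%
```

Odds ratios show up in case-control studies because the researchers choose how many cases and controls to recruit, so the risk of disease in each group can't be calculated directly. When the outcome is rare, the odds ratio approximates the risk ratio.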

Case series

Case series involve detailed reports on a series of patients, all undergoing the same treatment or with the same condition; a case report (or case study) does the same for a single patient. They're often used in medical research to document novel cases, but the absence of a control group limits the ability to infer causality. Case series are fun to read, but their findings can't always be generalized to the broader population.

Diving deeper: evaluating study design

Okay so now you know the types of studies, and their pros and cons. Let’s go a little deeper and talk about how we can pick apart an individual study.

Sample size

A larger sample size increases the statistical power of the study, making it more likely to detect a true effect if one exists. A study with a small sample size may not have enough power to draw reliable conclusions. Take, for example, this study investigating whether the supplement CoQ10, along with “mountain spa rehabilitation,” would help patients recover from COVID-19 symptoms. While the researchers found “accelerated recovery” among participants who took CoQ10 at a mountain spa, the study included only 36 patients, so more research is needed before drawing a conclusion.
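Power can be estimated before a study starts. The sketch below uses the standard normal-approximation formulas for comparing two group means, with the effect size expressed as Cohen's d (the difference in means divided by the standard deviation). The function names are hypothetical, and splitting 36 patients into two groups of 18 with a moderate effect of d = 0.5 is an assumption for illustration, not a description of the CoQ10 study's actual design.

```python
import math
from statistics import NormalDist

# Illustrative sketch (hypothetical helper names); normal approximation, which
# slightly overstates power compared with an exact t-test when samples are small.
Z = NormalDist()  # standard normal distribution


def power_two_groups(effect_size, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-sample comparison of means."""
    z_crit = Z.inv_cdf(1 - alpha / 2)
    return Z.cdf(effect_size * math.sqrt(n_per_group / 2) - z_crit)


def n_per_group_needed(effect_size, power=0.8, alpha=0.05):
    """Participants needed per group to reach the target power."""
    z_alpha = Z.inv_cdf(1 - alpha / 2)
    z_beta = Z.inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / effect_size**2)
```

Under those assumptions, `power_two_groups(0.5, 18)` comes out around 0.32, meaning a real, moderate effect would be missed roughly two times out of three, while `n_per_group_needed(0.5)` suggests about 63 participants per group for the conventional 80% power.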

Replication

Science relies on the ability to replicate findings. If a study's results have been replicated by other researchers, that lends more credibility to the findings. Unfortunately, failed replication is a massive problem in science. A 2016 Nature survey of more than 1,500 researchers found that more than 70% had tried and failed to reproduce another scientist's experiments, and more than half had failed to reproduce their own.

Bias and confounding

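Researchers should take steps to minimize bias (for example, through randomization and blinding) and to control for confounding variables, either through the study design or in the statistical analysis. Any remaining potential sources of bias or confounding should be discussed in the study, often in the Discussion section.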
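One common way to control for a confounder in the analysis is to stratify by it: compare exposed and unexposed people separately within each level of the confounder, then combine the results. The sketch below uses made-up counts in which coffee drinkers appear to have nearly three times the risk of lung cancer, only because coffee drinkers in this hypothetical data are more often smokers. The Mantel-Haenszel risk ratio is a standard way to pool stratum-specific risk ratios; the helper names and all counts are illustrative assumptions.

```python
# Illustrative sketch; helper names and counts are hypothetical.


def risk(cases, total):
    """Proportion of a group that developed the outcome."""
    return cases / total


def mantel_haenszel_rr(strata):
    """Risk ratio pooled across strata of a confounder (Mantel-Haenszel).

    Each stratum is (exposed_cases, exposed_total, unexposed_cases, unexposed_total).
    """
    numerator = denominator = 0.0
    for a, n1, c, n0 in strata:
        n = n1 + n0
        numerator += a * n0 / n
        denominator += c * n1 / n
    return numerator / denominator


# Coffee drinkers (exposed) vs. non-drinkers, split by smoking status.
smokers = (40, 400, 10, 100)      # 10% risk whether or not they drink coffee
non_smokers = (1, 100, 4, 400)    # 1% risk whether or not they drink coffee
strata = [smokers, non_smokers]

# Crude comparison: ignore smoking and lump everyone together.
exp_cases, exp_total, unexp_cases, unexp_total = (sum(col) for col in zip(*strata))
crude_rr = risk(exp_cases, exp_total) / risk(unexp_cases, unexp_total)

stratum_rrs = [risk(a, n1) / risk(c, n0) for a, n1, c, n0 in strata]
adjusted_rr = mantel_haenszel_rr(strata)
```

Here the crude risk ratio is about 2.9, but within each smoking stratum the risk ratio is 1, and the Mantel-Haenszel estimate is 1 as well: once smoking is accounted for, coffee has no association with lung cancer in this made-up data.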

Conflict of interest

It's important to check whether the researchers have any conflicts of interest, such as financial ties to a company that could benefit from the study's results. The question is: who paid for the study? This is usually listed in a Funding or Disclosures section towards the end.

Consistency with other research

Findings that are consistent with a body of previous research are more likely to be reliable than those that contradict well-established results without a good explanation.

Interpreting results

It’s one thing to read the paper’s conclusion. It’s another to look at the results and determine the take-aways yourself. Here are some tips for reading the “results” section of a paper.

Statistical significance

If a result is statistically significant, it means that data at least this extreme would be unlikely if there were truly no effect. It does not tell you the probability that the result is due to chance, and it doesn't necessarily translate into practical or scientific importance. A result might be statistically significant yet too small to matter in the real world.

The p-value tells you how likely it would be to observe results at least as extreme as those in the experiment, assuming the null hypothesis (a statement of no effect or no difference) is true. It's a tool to help assess the evidence against the null hypothesis.
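A permutation test makes the p-value definition concrete. If the null hypothesis is true and the treatment does nothing, the labels "treatment" and "control" are arbitrary, so we can reshuffle them every possible way and count how often the shuffled data show a difference at least as large as the real one. That fraction is the p-value. The sketch below is a hypothetical illustration with tiny made-up groups; exhaustive enumeration only works for very small samples, and larger analyses typically sample random shuffles instead.

```python
from itertools import combinations
from statistics import mean

# Illustrative sketch of an exact permutation test (names and data are hypothetical).


def permutation_p_value(group_a, group_b):
    """Exact two-sided permutation test for a difference in means."""
    pooled = list(group_a) + list(group_b)
    observed = abs(mean(group_a) - mean(group_b))
    n_a = len(group_a)
    at_least_as_extreme = total = 0
    # Under the null hypothesis, any n_a of the pooled values could have been
    # "group A", so enumerate every possible relabeling.
    for idx in combinations(range(len(pooled)), n_a):
        chosen = set(idx)
        relabeled_a = [pooled[i] for i in idx]
        relabeled_b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        total += 1
        # Small tolerance so floating-point rounding doesn't hide ties.
        if abs(mean(relabeled_a) - mean(relabeled_b)) >= observed - 1e-12:
            at_least_as_extreme += 1
    return at_least_as_extreme / total
```

With made-up scores of 5, 6, 7, 8 in a treatment group and 1, 2, 3, 4 in a control group, only 2 of the 70 possible relabelings are as extreme as the observed split, so `permutation_p_value([5, 6, 7, 8], [1, 2, 3, 4])` returns 2/70, about 0.029.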

A small p-value, typically less than 0.05 (the conventional significance level, often called alpha), is usually taken as evidence that the result is statistically significant. Conversely, a large p-value suggests that the evidence is not strong enough to reject the null hypothesis, which is not the same as proving there is no effect.
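To see how statistical significance and practical importance can come apart, the sketch below runs a standard two-proportion z-test on two hypothetical trials with the same small difference in recovery rates (50.0% vs. 50.3%) but very different sizes. The function name and all counts are invented for illustration.

```python
import math
from statistics import NormalDist

# Illustrative sketch; the helper name and trial counts are hypothetical.


def two_proportion_p_value(successes_1, n_1, successes_2, n_2):
    """Two-sided p-value from a pooled two-proportion z-test."""
    p_1, p_2 = successes_1 / n_1, successes_2 / n_2
    # Under the null hypothesis both groups share one recovery rate.
    pooled = (successes_1 + successes_2) / (n_1 + n_2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_1 + 1 / n_2))
    z = (p_2 - p_1) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Same 0.3-percentage-point difference, two hypothetical trial sizes.
small_trial = two_proportion_p_value(500, 1_000, 503, 1_000)
huge_trial = two_proportion_p_value(500_000, 1_000_000, 503_000, 1_000_000)
```

The small trial gives a p-value near 0.9, while the huge trial gives one far below 0.05, even though the difference is identical and arguably too small to matter to patients. That's why it pays to look at the size of the effect, not just the p-value.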

Correlation vs. causation vs. effect modification

It helps to distinguish between correlation (two variables change together), causation (one variable produces a change in another), and effect modification (the effect of one variable on another differs across levels of a third variable). Correlation tells you that two variables move together, but not why. Causation asserts that one variable is responsible for changes in the other. Effect modification adds another layer by revealing that a third variable changes the strength or direction of the relationship between the first two. Confusing correlation with causation, or overlooking effect modification, can lead to misleading conclusions, so scrutinize the methodology with all three in mind.
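Effect modification is easiest to spot by computing the effect separately within each level of the third variable. In the hypothetical trial below (all counts invented), a drug halves the risk of an adverse outcome in patients under 65 but does nothing for older patients, and a single overall risk ratio hides that difference. Unlike confounding, where the stratum-specific estimates agree with each other but differ from the crude one, here the strata genuinely disagree. In practice, researchers would use a formal test for interaction rather than eyeballing the numbers.

```python
# Illustrative sketch; helper name and counts are hypothetical.


def risk_ratio(treated_events, treated_total, control_events, control_total):
    """Risk of the outcome with treatment divided by risk without it."""
    return (treated_events / treated_total) / (control_events / control_total)


# (treated events, treated total, control events, control total) per age group.
strata = {
    "under 65": (10, 100, 20, 100),
    "65 and over": (20, 100, 20, 100),
}

by_age = {group: risk_ratio(*counts) for group, counts in strata.items()}
# Overall: add up the counts across both age groups.
overall = risk_ratio(*(sum(col) for col in zip(*strata.values())))
```

`by_age` comes out to 0.5 for patients under 65 and 1.0 for those 65 and over, while `overall` is about 0.75, a number that describes neither group well.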

Generalizability

As discussed above, a large sample size can lend more credibility to a study, but it's equally vital to assess whether the sample represents the broader population. A large but biased sample might produce unreliable conclusions.
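A quick simulation shows why size can't fix a biased sample. In the hypothetical population below (all rates invented), 5% of younger people and 20% of older people have a condition, and younger people make up 70% of the population, so the true prevalence is 9.5%. A sample recruited so that 90% of participants are young keeps landing near 6.5% no matter how large it gets. The function name and numbers are assumptions for illustration.

```python
import random

# Illustrative sketch; all rates and names are hypothetical.
RISK_YOUNG, RISK_OLDER = 0.05, 0.20  # prevalence within each age group
TRUE_SHARE_YOUNG = 0.7               # share of young people in the population
TRUE_PREVALENCE = TRUE_SHARE_YOUNG * RISK_YOUNG + (1 - TRUE_SHARE_YOUNG) * RISK_OLDER  # 9.5%


def sample_prevalence(n, share_young, seed=0):
    """Estimate prevalence from n people, a given share of whom are young."""
    rng = random.Random(seed)  # seeded so results are reproducible
    cases = 0
    for _ in range(n):
        risk = RISK_YOUNG if rng.random() < share_young else RISK_OLDER
        if rng.random() < risk:
            cases += 1
    return cases / n


for n in (1_000, 100_000):
    representative = sample_prevalence(n, TRUE_SHARE_YOUNG)
    skewed = sample_prevalence(n, 0.9)  # young people over-recruited
    print(f"n={n:>7,}: representative {representative:.3f}, skewed {skewed:.3f}")
```

Both estimates tighten as n grows, but the skewed one tightens around the wrong answer.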

Examine graphs and tables

Visual aids can be a concise way to represent complex data, but it's essential to interpret them with caution. Look at the scales, legends, and underlying assumptions behind these visuals. They often contain nuances that are crucial to understanding the results accurately.

Contextualize

Consider the results in light of previous studies and the broader field. Consistency or inconsistency with existing literature can provide deeper insights into the implications of the findings.

Putting it all together

Now that you've dissected the study, it's time to step back and look at the whole picture. Do the researchers' conclusions align with the data presented? Do they acknowledge the study's limitations and potential biases? Are there conflicts of interest, such as funding from a company that stands to benefit from the results? Your evaluation should also consider the relevance of the study to the broader field, and how it fits within the existing body of literature.

Understanding and evaluating studies isn't a skill developed overnight. But with time it gets easier, and even more enjoyable. I wish you the best on your paper reading pursuits!

Scientific study glossary

Abstract: A brief summary of a research article, including its purpose, methodology, results, and conclusions, providing an overview of the study's content.

Bias: Systematic errors in the design or conduct of a study that lead to inaccurate or misleading conclusions.

Blinding: A method used to prevent bias by concealing the allocation of treatments from participants, investigators, or both.

Causation: A relationship where a change in one variable is responsible for a change in another.

Confounding variable: An external variable that is associated with both the independent and dependent variables and can distort the apparent relationship between them, creating, exaggerating, or masking an association.

Control group: A group in a study that does not receive the experimental treatment, serving as a comparison to evaluate the treatment's effect.

Correlation: A statistical relationship between two variables, indicating that they tend to change together, without implying a cause-and-effect relationship.

Dependent variable: The outcome variable that researchers are interested in explaining or predicting.

Effect modification: A situation where the effect of one variable on another varies across levels of a third variable.

External validity: The extent to which the results of a study can be generalized to other populations, settings, or times.

Hypothesis: A testable prediction or statement about the relationship between variables.

Independent variable: The variable that is manipulated or categorized to observe its effects on the dependent variable.

Internal validity: The degree to which a study's design and conduct allow for an accurate conclusion about the relationship between the independent and dependent variables.

Peer review: A process in which other experts in the field evaluate a manuscript for its quality, validity, and relevance before publication.

P-value: A statistical measure used to evaluate the evidence against a null hypothesis, representing the probability of observing results as extreme as, or more extreme than, those observed, assuming the null hypothesis is true.

Replication: The act of repeating a study using the same methods to verify the original findings.

Sample size: The number of observations or participants included in a study.

Statistical significance: A judgment of whether an observed effect would be unlikely to arise by chance alone if the null hypothesis were true, usually made by comparing the p-value to a predetermined significance level (e.g., 0.05).